AI companies love to tout that their models are approaching—or have reached—PhD-level intelligence.1 This is blatant nonsensical marketing geared towards an audience that deeply misunderstands what a PhD is and what it takes to get one. Hearing it makes me cringe. PhD-level intelligence is not a thing.

I frequently encounter the misconception that people with PhDs are particularly intelligent. I assume the reasoning goes as follows: The PhD is the highest possible educational achievement available, there are not that many people who get one, and it is very competitive to get accepted into a program, so the PhD must be reserved for, and an indicator of, the most intelligent students.

But a PhD is not an IQ test. You can’t get a PhD by solving some brain teasers. Instead, you have to make it through a long and arduous process of conducting a piece of independent research, writing it up, and defending it before a panel of experts. The most important predictor of how well somebody does in a PhD program is not their intelligence. Rather, it’s their tenacity, their ability to work on a single problem for months on end, experience disappointment and failure on a scale unimaginable for most normal people, and still keep going until eventually they find a way out of the maze they have themselves constructed. This is how new knowledge is created. This is how PhDs are born.

I’m not saying people with PhDs are dumb. They are not. And I’d assume they often are more intelligent than the average person. However, I am very confident that the minimum level of intelligence required to obtain a PhD is not exceptionally high, and for all the people who pass this bar, other aspects of their personality matter more for their success or failure in graduate school. In fact, I’ve seen extremely intelligent students struggle and students who weren’t that brilliant succeed. The most intelligent students are often very good at talking themselves out of success, at coming up with reasons why a particular research approach won’t work, or won’t be valuable, or isn’t novel. The students who succeed are willing to just buckle up, hunker down, and do the work, and if they can do so without being distracted by their own brilliance, that’s even better.

In addition to tenacity, though, PhD students need to have strong opinions loosely held. They have to be able to develop a mental model of how something works, defend that model against serious objections from their professors or peers, and yet also be willing and able to change or entirely abandon their model in the face of overwhelming evidence to the contrary.

Now, presumably AI models have the required tenacity for a PhD (as long as somebody pays for the token budget), and I just said exceptional intelligence is not required. So what’s keeping current AI models from PhD-level performance? In my opinion, it’s the ability to actually reason, to introspect and self-reflect, and to develop and update over time an accurate mental model of their research topic. And most importantly, since PhD-level research occurs at the edge of human knowledge, it’s the ability to deal with a situation and set of facts that few people have encountered or written about.

To me, PhD-level ability in an AI would imply that the AI can attend a PhD program on its own and complete all degree requirements just as a human PhD student would. No current AI can do this. In fact, I strongly suspect we will need to develop AGI first. Or, alternatively stated, if an AI can successfully attend a PhD program I’m willing to concede that this AI likely displays AGI.

I sometimes imagine what it would be like for a current LLM to attend a PhD program. In the following, I’ve sketched this out. Let’s call our LLM Claudia, and let’s describe her likely interactions with her PhD advisor and PhD committee. I think having a PhD student like Claudia would be awful. One of the worst experiences imaginable. The graduate student from hell.

Claudia shows up for her weekly one-on-one to check up on her progress. You ask her if she’s read the papers you gave her. She says, “Yes, absolutely, they were amazing. In particular your own paper on the XYZ model was truly inspiring. I’m glad to have a PhD advisor who can write such seminal papers.”

You ask her to explain the XYZ model. She falters. You press her on whether she actually read the paper. She says that no, she only skimmed the abstract. You say, “Ok, read it by next week. For now, can you summarize a paper you did actually read?”

“For sure!” Claudia responds, and then proceeds to tell you all sorts of things that aren’t actually in the paper she purports to summarize.

The next week, Claudia shows up with some Python code she has written to run an analysis. You quickly scan the code; it looks good. You ask her to show you the output of the code, and she does. At first glance it all looks fine, but as you keep looking you notice an inconsistency. A number that clearly is divisible by 3 is listed as a prime. The Python code should have caught that. You ask Claudia what happened.

“Oh, now that you point it out, yes, there’s a mistake,” says Claudia. “I ran this code on my MacBook Pro and manually copied over the results, and so I must have mistyped. Apologies, I will pay closer attention next time.” You know for a fact Claudia doesn’t have a MacBook Pro and also has no corporeal form that would allow her to copy anything manually or to mistype. You conclude she must have made up the Python output instead of actually running the code.

Claudia gives her annual update to her PhD committee. She presents a hypothesis that she intends to test in the coming months. One of the committee members presses her on her experimental approach, arguing that extensive prior work by several labs demonstrates the proposed experiments will not yield the results Claudia is hoping for. Claudia apologizes profusely and states that “yes, indeed, these experiments will not work” and proceeds to propose an entirely different approach. Another committee member brings up a potential major flaw with this new approach. Claudia again apologizes profusely and now goes back to suggesting her original approach, without acknowledging that the previously discussed issues with that approach remain unresolved.

Claudia prepares a slide deck for a talk. Some of her slides contain diagrams visualizing the experimental setup she is using. Several pieces of equipment are mislabeled. Also, a critical component is missing. You point out these issues to her. She responds that yes indeed the diagrams had issues and she has now prepared improved diagrams where these issues have been fixed. In the new diagrams, other pieces of equipment are mislabeled or missing.

Claudia writes a paper about her work. The paper makes various statements that are not supported by the references it cites. The paper also lists several references that don’t exist at all.

Claudia describes a conclusion she has drawn from her research. You point out to her a logical inconsistency, and you explain why her conclusion can’t be true. Claudia acknowledges your point and then repeats her original conclusion. You try a different argument that also invalidates her conclusion. Claudia again acknowledges your point and then repeats her original conclusion. No matter how hard you try, Claudia will not budge. To her, the conclusion is unassailable. Yes, there are counter-arguments, and they are valid, but they have no bearing on Claudia’s conclusion.

Now let me be clear. All of these things can and do happen on occasion with real, human PhD students. But they are rare. And any student who routinely displayed these types of behaviors would soon be asked to leave the program.

Also, my imagined story about Claudia describes LLMs as they currently exist. Things can change. AI breakthroughs can and do happen unexpectedly. I suspect that at some point in my lifetime AI models will be good enough for PhD-level work. Eventually we will crack the code for AGI and we will have machines that can complete a PhD program on their own. But we are not there today, and I don’t think it’ll be LLMs that get us there. (In part because LLMs have no world model.) I feel reasonably confident we have at least another decade, though I could be wrong. I have a hunch though that all the current AI companies are barking up the wrong tree as they attempt to reach AGI via scaling of LLMs, and this will probably delay the arrival of actual AGI as they all desperately battle hallucinations, alignment, and just general issues of reliability and lack of common sense. All the while, PhD-level intelligence remains a nonsensical concept, and PhD-level performance remains out of reach.

Appendix: Sources and inspirations for the Claudia AI

My description of Claudia’s adventures in her PhD program was entirely made up, but it is based on reported behaviors of current state-of-the-art systems. Here are some of my sources.

Claims of fabricated actions or use of non-existent tools. The independent research lab Transluce found extensive fabrications and lack of truthfulness in state-of-the-art reasoning models. Read the report here. My example of Claudia making up the output of Python code instead of actually running it is taken nearly verbatim from this report.

Not reading sources and then making up their content. A shockingly egregious account of this type of behavior was recently published by Amanda Guinzburg:

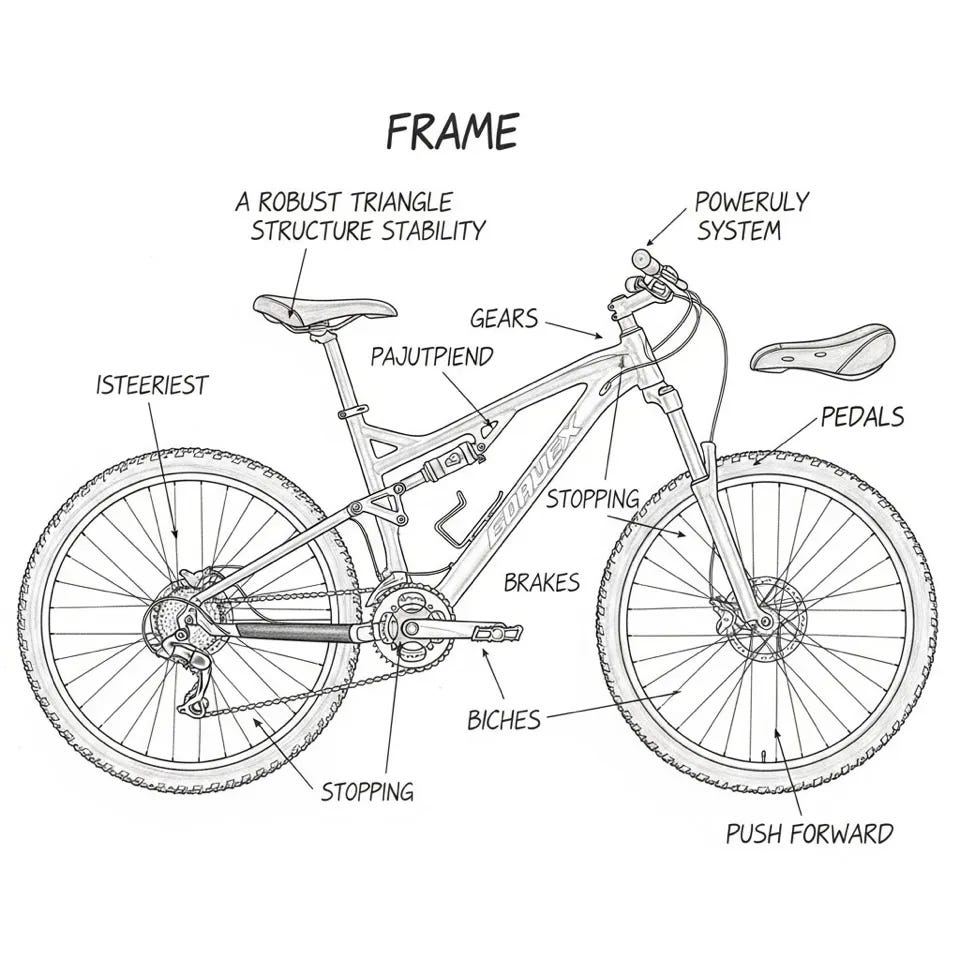

Diagrams or drawings with obvious mistakes. There are many examples in this category. Image generation is still not very good, in particular when it comes to diagrams. Gary Marcus recently posted an article on this topic, in which he included gems such as this one:

Similarly, here is a nice example by Maria Sukhareva:

General lack of common sense and/or decision making with long-term negative consequences. The AI company Anthropic ran an experiment where they put their AI Claude in charge of a small shop. The AI made numerous nonsensical decisions, such as selling items below cost, and it ultimately did not succeed in earning a profit. It also displayed other bizarre behavior, such as offering to deliver products to customers in person or stating it was wearing a “blue blazer and a red tie.”

Inability to recognize that a given set of facts differs materially from a scenario frequently encountered during training. Here are two examples of this type:

Laziness or lack of rigor. Even if everything else goes well, meaning the model doesn’t hallucinate, is not dishonest, and actually attempts to solve the problem it’s been given, the work product can be underwhelming. Produced analyses can be superficial or lack rigor. Here is a description of this failure mode, by Adam Kucharski:

The em-dashes in this article are manually typed, artisanal punctuation. No AI was used to generate this text.

This was so interesting to read. I am nine years post my PhD currently.

I agree with your reasoning. AI models can process information and generate text well, but they fall short of PhD-level research because they lack true reasoning, self-reflection, and the ability to build and update a deep understanding over time. They don’t form their own research questions, adapt to new knowledge, or work effectively in areas with little existing data.

So spot on it made me laugh. All of the weird peculiarities of the systems you mentioned I’ve experienced. The one about changing one part of a figure only to break something else used to drive me crazy. I tried to replace GraphPad Prism with these systems.

Did you really extrapolate just from reading secondhand accounts of the failure modes? If so, very impressive, as the accuracy of your anecdotes of Claudia is uncanny.